The search landscape just split in two. New research shows Google’s AI Overviews and large language models cite very different sources. Only a small overlap of domains appears in both. If your authority footprint is one-dimensional, you’ll win in one system and vanish in the other.

This isn’t just another “optimise your FAQs” moment. AI media licensing is redrawing the map. Search Engine Land’s investigation into AI media partnerships and brand visibility shows that publisher networks that partner with AI platforms become trusted inputs for training and answer pipelines. If your brand and experts don’t show up in those ecosystems, you’ll fight uphill for citations in generative results.

7.2% Overlap: What the Data Actually Shows

A study across 8,090 keywords and 25 verticals compared citations in Google’s AI Overviews vs. LLM responses (GPT, Claude, Gemini). The overlap? Only 7.2% of domains appeared in both. Of 22,410 unique domains, 70.7% were exclusive to AI Overviews, 22.1% exclusive to LLMs, and a mere 7.2% were shared. That’s a signal: authority is being measured in two different ways.

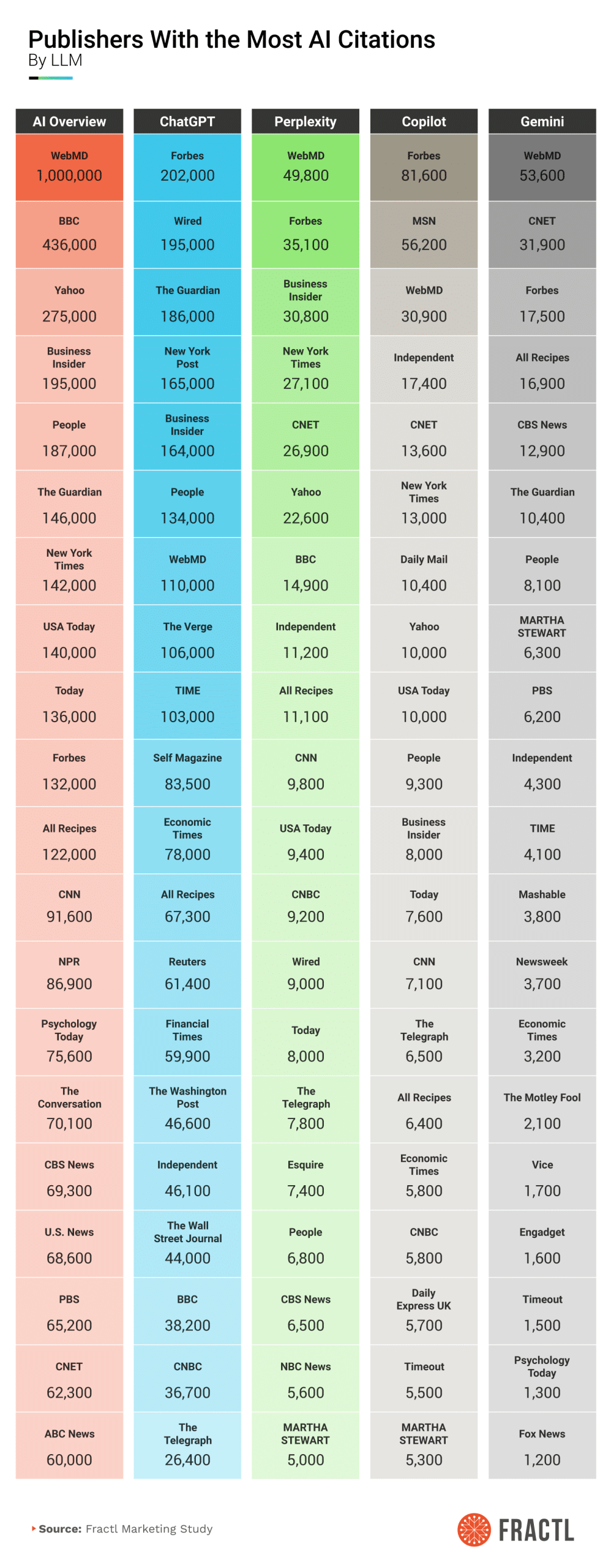

Google’s AI Overviews still favour the “old guard” of high-authority news and reference sites, plus .gov and .edu. LLMs, meanwhile, skew to investigative reporting, vertical specialists (Edmunds, Investopedia, AllRecipes, Wired), educational platforms (Reddit, GitHub, Coursera), and authoritative data portals like journals and standards. Translation: topic depth, clarity, and structure matter as much as traditional DA.

| Area | Google AI Overviews | LLM Answers |

| Who gets cited most | Skews to established, high-authority sources:

|

Skews to:

|

| What’s weighted | Leans toward the “old guard” authority already dominant in SERPs. | Prioritises depth, educational value, and conceptual clarity over traditional web authority signals. |

| Off-site focus | Earn coverage with high-authority news/information and institutional sources AIO cites frequently. | Earn coverage with investigative outlets, vertical specialists, educational platforms, and data portals that LLMs cite more often. |

Which Sources LLMs Prefer and Why That Matters

AI media partnerships shift three levers that influence whether your brand is cited:

- Coverage: How much archive content an AI can legally crawl and reuse.

- Context: How often those sources appear in pretraining corpora, retrieval indexes, eval sets, and safety systems.

- Credibility: The weight assigned to those sources at answer time.

When a publisher network becomes a partner, it isn’t just “another site.” It becomes a landmark in the model’s knowledge graph. Over time, those landmarks shape which stories get cited, repeated, and remembered.

What This Means For Your SEO & PR Stack

You need a two-pronged SEO strategy: structured, expert content on-site and deliberate off-site authority built through targeted media coverage in the publisher networks AI systems rely on. Organic reach from “helpful content” alone won’t carry you across both ecosystems.

Standardise Machine-Friendly Content

Models learn from patterns. Use layouts that are easy to parse and reuse:

- Definition blocks for core terms

- Step-by-step how-tos

- Comparison tables

- Ordered lists

- Clear H2/H3 scaffolding + schema, FAQs, and TL;DR (too long; didn’t read) summaries

Make your content skimmable for humans and predictable for models. Build templates and enforce them in your CMS (Content Management System).

Build Vertical Depth, Not Just Breadth

LLMs reward niche expertise that saturates a topic. Aim to become the definitive source in your category:

- Publish dense, structured explainers and buyer’s guides for your vertical

- Map subtopics and keep them fresh with incremental updates

- Attach named experts to pillar pages and bylines

Think “when it’s {your topic}, go to {your brand}.”

Engineer Repetition and Syndication

Statistical gravity comes from repetition. One Associated Press (AP) pickup can become hundreds of local clones. Provide assets that beg to be reused:

- Embeddable charts with copy-and-paste code

- Stat bullets with clean attributions

- Data stories designed for wire distribution and trade pickup

This increases how often your phrasing is crawled and reused by models.

Target the Right Publisher Networks

Treat media outreach like keyword targeting. Prioritise the conglomerates and titles most cited within your niche, the ones more likely to influence model training and retrieval. Maintain a target list by vertical and update it quarterly as partnerships evolve.

Earn Editorial Signals Models Trust

Strong editorial standards and human fact-checking get re-crawled more often, becoming the “default language” models lean on. Pitch original research, expert commentary, and contrarian explainers to high-standard newsrooms. Keep your newsroom page and press kit updated to reduce friction for editors.

Balance Reddit Reality With Provenance

Yes, UGC (User-Generated Content) communities drive a lot of citations today. But they are vulnerable to contamination and synthetic noise. As provenance weighting increases, expect a credibility correction. Hedge now: earn coverage in high-verifiability outlets while maintaining community presence for discovery and feedback.

Refresh Cadence to Trigger Re-Crawls

Freshness drives re-crawl frequency. Set Service-Level Agreements for updates to pillar pages, stats, and definitions. Publish date-stamped deltas rather than silent edits. Repitch updated findings to priority publishers to reinforce your patterns in the training diet.

How to Win Brand Visibility in GenAI Answers

-

- Audit your dual visibility. For your top 50 queries, sample AI Overviews and LLM answers. Record which domains are cited. Flag gaps where you appear in one but not the other. Build a counter-plan per gap.

- Rebuild page architecture. Roll out standard templates with definitions, steps, tables, and TL; DRs (too long; didn’t read) across your pillars. Add schema and FAQ blocks where appropriate. Track reuse of your blocks in media and community posts.

- Concentrate your expertise. Pick 3–5 subtopics to dominate. Publish calendars, comparison matrices, troubleshooting guides, and glossary entries. Tie each asset to an expert with media availability.

- Revamp PR targeting by AI influence. Build a list of the publishers and networks most cited in your vertical and by the AI systems that matter to your audience. Prioritise recurring columns, data features, and expert Q&As with those outlets.

- Design for syndication. Package your research with clean charts, embed codes, and quotable stat blocks. Offer regional cuts to increase local pickup. Track pickups and reprints to quantify your “statistical gravity.”

- Operationalise freshness. Set a 90-day update cadence for core pillars and a 30-day cadence for fast-moving stats. Publish change logs and repitch material updates to your priority publishers.

- Measure what matters. Beyond traffic, track:

-

- Mentions and links from your priority publisher list

- Citation presence in AI Overviews and LLM answers

- Reuse of your phrasing in third-party writeups

- Time-to-pickup for press releases and data stories

Why we care

Search is now two games at once. Google’s AI Overviews reward established authority signals; LLMs reward depth, clarity, and structured expertise. AI media partnerships tilt the field further by elevating certain publisher networks into the model’s “trusted memory.”

If you don’t intentionally show up in those ecosystems, with machine-friendly content and targeted earned media, your brand will be underrepresented in generative answers. The fix is practical: standardise your on-site structure, concentrate vertical depth, and point your PR at the publishers’ AI systems that lean on. Start now while the overlap is small and the opportunity to shape model memory is still wide open.

Stay In The Know

Cut the clutter and stay on top of important news like this. We handpick the single most noteworthy news of the week and send it directly to subscribers. Join the club to stay in the know…